AI commerce in 2026 will be defined by a single question: will consumers let AI assistants actually shop for them? As assistants move beyond research into delegated purchasing, trust becomes the limiting factor. The winners won't be platforms with the best models, but those that earn repeated permission to act. This requires three trust pillars: alignment (does the agent act for me?), control (can I set meaningful constraints?), and accountability (is it easy to fix mistakes?). Trust isn't abstract—it shows up in measurable behavior signals across search, social, and retail ecosystems.

AI in commerce is moving past the novelty phase.

For a while, “AI shopping” meant research help: summarize reviews, compare options, shortlist products. Useful, but the consumer still did the buying.

Now the ambition is different. Assistants are being built to act, not just advise.

That shift is the real story for 2026, because the moment an AI can place an order, choose a substitute, or surface a sponsored product mid-conversation, the limiting factor stops being capability and becomes something far more human:

Trust.

In 2026, the winners in AI commerce will not simply be the platforms with the best models. They will be the ones that earn repeated permission to act.

Agentic commerce refers to AI assistants that execute purchases on behalf of users, not just recommend products. Unlike traditional e-commerce where consumers browse and buy, or use AI to help with research, agentic commerce involves delegation. The AI has permission to complete transactions based on learned preferences and constraints.

Delegated purchasing is the core behavior shift: consumers authorize an assistant to make buying decisions within defined parameters (budget, brand preferences, delivery requirements) without requiring approval for each transaction. This represents a fundamental change from oversight to trust-based automation.

A handful of ecosystem moves have made the direction clear:

None of this guarantees behavior change by itself, but it sets up the central hypothesis:

2026 will be the year AI commerce becomes a trust problem.

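As AI becomes an agent, consumers will accept less oversight only when three things feel true: the agent acts for them (alignment), they can set meaningful constraints (control), and mistakes are easy to fix (accountability).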

Alignment becomes the pressure point as sponsored placements move into conversational interfaces. People will tolerate ads in discovery. They will not tolerate a sense that the agent is spending their money based on someone else's incentives.

Or spending £90 when £8 would do.

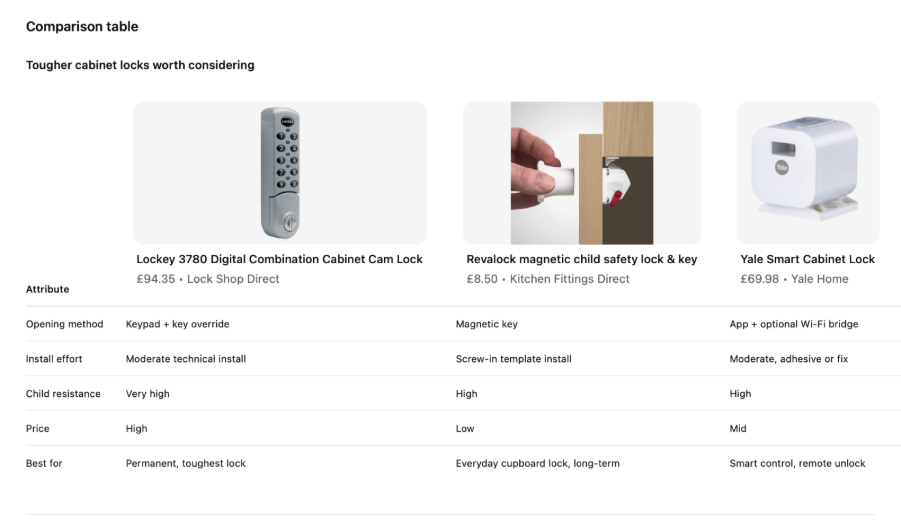

Here's what misalignment looks like in practice: I recently asked an AI assistant for child safety locks for cupboards. The top recommendation was a £94.35 professional-grade digital combination lock with keypad entry, serious hardware designed for permanent security installations. What I actually needed was the £8.50 magnetic lock, listed in the middle of the comparison, to keep my pesky toddler out of the cupboards.

The assistant had prioritized the highest-margin product, the most feature-rich option, or whatever the ranking algorithm happened to favor. What it didn't do was understand my actual use case. That's an alignment failure.

In low-stakes categories like this, the cost is mild annoyance and a quick manual correction. But scale this behavior to weekly grocery shops, medication reorders, or household essentials. Misalignment becomes the reason people won't delegate. Every time an assistant surfaces something that feels wrong—whether due to sponsorship, margin optimization, or poor contextual understanding—it resets trust and pushes users back into verification mode.

Alignment isn't about perfection. It's about whether the consumer believes the agent's defaults match their priorities, not someone else's revenue model.

The promise of agentic commerce is convenience through delegation. But delegation without control feels reckless, not helpful.

Control isn't about adding steps back in. It's about letting people set the rules up front: budget caps, brand preferences, delivery priorities, substitution rules, approval thresholds. If people can define the rules, they can delegate without feeling irresponsible.

The platforms that win won't just offer on/off switches for delegation. They'll offer graduated control: the ability to define rules at the category level, set spending limits by context, specify substitution preferences (never swap brands for this, always choose cheaper for that), and adjust approval thresholds based on price or product type.
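To make graduated control concrete, here is a minimal sketch of how category-level rules might be represented and checked before an assistant acts. Every name in it (`CategoryRule`, `decide`, the example categories and limits) is hypothetical and chosen for illustration; it does not describe how any particular platform implements delegation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Substitution(Enum):
    NEVER = "never"                  # never swap brands in this category
    ALLOW_CHEAPER = "allow_cheaper"  # cheaper swaps are fine (price check left to the caller)
    ASK = "ask"                      # ask the user before any swap


class Decision(Enum):
    AUTO_BUY = "auto_buy"
    NEEDS_APPROVAL = "needs_approval"
    BLOCKED = "blocked"


@dataclass(frozen=True)
class CategoryRule:
    item_cap: float            # hard per-item spending limit (assumed unit: GBP)
    approval_threshold: float  # items priced above this need explicit approval
    substitution: Substitution


def decide(rules: Dict[str, CategoryRule], category: str, price: float,
           is_substitute: bool = False) -> Decision:
    """Return what the assistant may do with one item under the user's rules."""
    rule = rules.get(category)
    if rule is None:
        # No rule defined for this category: fall back to asking the user.
        return Decision.NEEDS_APPROVAL
    if price > rule.item_cap:
        return Decision.BLOCKED
    if is_substitute:
        if rule.substitution is Substitution.NEVER:
            return Decision.BLOCKED
        if rule.substitution is Substitution.ASK:
            return Decision.NEEDS_APPROVAL
    if price > rule.approval_threshold:
        return Decision.NEEDS_APPROVAL
    return Decision.AUTO_BUY


# Hypothetical rules a user might set; the numbers are illustrative only.
RULES = {
    "groceries": CategoryRule(item_cap=40.0, approval_threshold=25.0,
                              substitution=Substitution.ALLOW_CHEAPER),
    "child_safety": CategoryRule(item_cap=30.0, approval_threshold=15.0,
                                 substitution=Substitution.NEVER),
}

print(decide(RULES, "child_safety", 8.50))   # Decision.AUTO_BUY
print(decide(RULES, "child_safety", 94.35))  # Decision.BLOCKED
```

Under these assumed rules, the £8.50 magnetic lock would go through automatically while the £94.35 keypad lock would be stopped at the per-item cap. The design choice worth noting is the default: a category with no rule falls back to asking rather than acting.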

The behavioral signal of inadequate control is high edit rates. When users repeatedly modify suggested baskets, adjust recommended purchases, or abandon delegation flows to manually verify details, it means the constraints don't match their mental model of acceptable risk.

Accountability comes down to one question: when something goes wrong, is it easy to unwind, and is it clear who is responsible?

Returns, refunds, delivery issues, incorrect items: these are normal commerce events. If accountability is vague between the assistant, retailer, and payment provider, people will keep oversight high and delegation will stall.

In a traditional journey, consumers build trust through effort. They browse, cross-check, and validate.

In an agentic journey, trust has to be designed, because the consumer has deliberately removed steps.

Agentic commerce does not remove friction. It relocates it.

The friction moves from browsing to trust decisions.

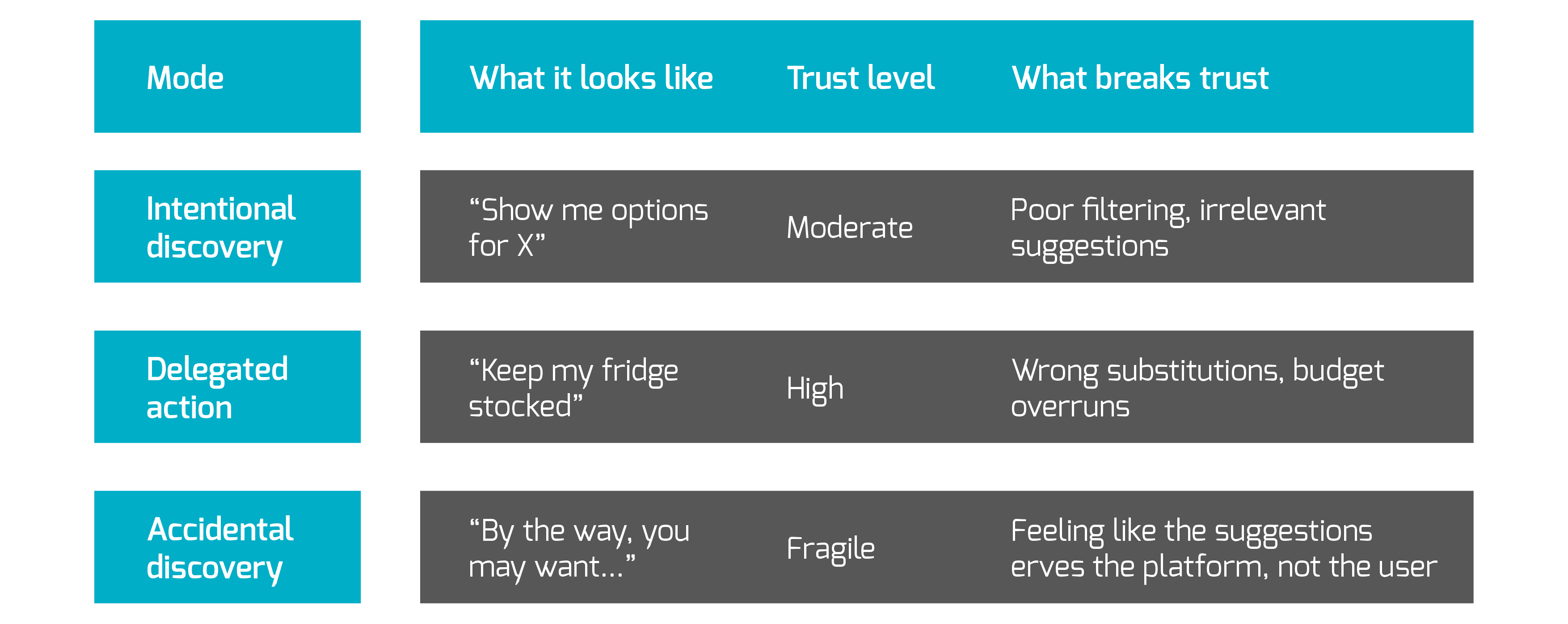

AI commerce is not one journey. It will split into three modes, and trust works differently in each.

“Help me choose.”

This is the low-risk adoption path. People use AI to narrow options, understand trade-offs, and feel confident faster.

Trust requirement: moderate, because verification is easy.

“Just do it for me.”

This is where behavior really changes. Weekly shop. Repeat replenishment. Known brands. The agent is authorized to act, not just suggest.

Trust requirement: high, because the cost of mistakes is real.

In the third mode, a suggestion or sponsored placement nudges a consumer into a funnel they did not start intentionally.

This is subtle, and it is where monetisation can become a trust stress test.

Trust requirement: fragile. Any suspicion of misalignment triggers verification loops.

Trust sounds abstract until you look at behavior.

At RealityMine, we focus on what people do across assistants, search, social, and retailer ecosystems. That is where trust shows up first.

In 2026, the companies that win will not just generate great answers. They will generate repeatable confidence.

If current momentum continues, a few outcomes feel likely:

AI commerce is shifting from browsing to delegation, and the question for 2026 isn't "can the agent shop?" but "will people let it?"

Key takeaways for businesses building in this space:

Design for delegation, not just recommendation. The interface and experience must explicitly support constraint-setting, approval thresholds, and easy unwinding of mistakes.

Treat alignment as your competitive moat. If users suspect your assistant prioritizes margin over fit, they'll keep it in research mode permanently. Transparency about how products are surfaced matters more than the sophistication of the recommendation engine.

Measure trust through behavior, not surveys. Track cross-checking patterns, basket edit rates, time-to-purchase compression, and repeat delegation frequency. These reveal whether confidence is building or eroding.
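As a rough illustration of what measuring trust through behavior could look like, the sketch below computes two of the signals named above from session records. The `Session` fields and both metric definitions are assumptions made for this example, not RealityMine's methodology, and the sample data is invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Session:
    delegated: bool       # the user let the assistant complete the purchase
    items_suggested: int  # items in the basket the assistant proposed
    items_edited: int     # items the user changed or removed before checkout


def basket_edit_rate(sessions: List[Session]) -> float:
    """Share of suggested items that users edited (assumed definition)."""
    suggested = sum(s.items_suggested for s in sessions)
    edited = sum(s.items_edited for s in sessions)
    return edited / suggested if suggested else 0.0


def delegation_rate(sessions: List[Session]) -> float:
    """Share of sessions in which the user delegated the purchase (assumed definition)."""
    if not sessions:
        return 0.0
    return sum(1 for s in sessions if s.delegated) / len(sessions)


# Invented sample data, for illustration only.
SESSIONS = [
    Session(delegated=True, items_suggested=10, items_edited=1),
    Session(delegated=False, items_suggested=8, items_edited=4),
    Session(delegated=True, items_suggested=12, items_edited=0),
    Session(delegated=True, items_suggested=10, items_edited=1),
]

print(f"basket edit rate: {basket_edit_rate(SESSIONS):.2f}")  # 6 of 40 items -> 0.15
print(f"delegation rate:  {delegation_rate(SESSIONS):.2f}")   # 3 of 4 sessions -> 0.75
```

Tracked over time and split by category, a falling edit rate alongside a rising delegation rate would suggest confidence is building; the reverse would suggest it is eroding.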

Expect trust to fragment by category. Consumers will delegate differently for groceries versus electronics, known brands versus discovery purchases, low-stakes versus high-stakes decisions. One-size-fits-all trust models won't work.

The companies that get trust right won't just win transactions—they'll win routines. And in commerce, routines are where the real value compounds.

Craig Parker is a Product Manager at RealityMine, where he leads the development of mobile app and web measurement solutions. With over a decade of experience spanning project delivery, UX optimization, and data-driven SaaS products, Craig specializes in turning behavioral insights into strategic value. He’s especially interested in how emerging technologies like AI are reshaping user journeys across the app ecosystem.